Semantic Alignment of LiDAR Data at City Scale

Computer Vision and Pattern Recognition (CVPR), June 2015

Abstract

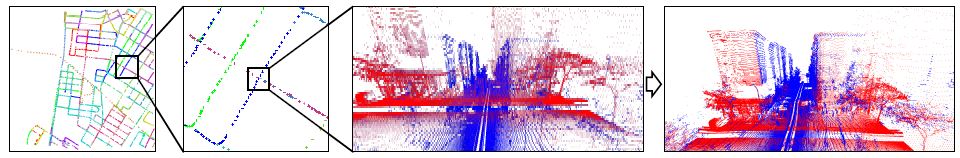

This paper describes an automatic algorithm for global alignment of LiDAR data collected with Google Street View cars in urban environments. The problem is challenging for two reasons. Global pose estimation techniques (e.g., GPS) do not work well in cities with tall buildings. Local tracking techniques (integration of inertial sensors, structure-from-motion, etc.) drift over long ranges, so data collected over wide areas ends up warped and misaligned by many meters. To address this problem, we extract "semantic features" with object detectors (e.g., for facades, poles, and cars). These features can be matched robustly at different scales, and are therefore selected for different iterations of an ICP algorithm. We have implemented an all-to-all, non-rigid, global alignment based on this idea. In experiments with data from large regions of New York, San Francisco, Paris, and Rome, it provides better results than alternative methods.
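The core idea in the abstract is ICP in which only certain semantic feature classes drive correspondences at each iteration. The sketch below illustrates that idea in a deliberately simplified form. It is not the authors' implementation, which performs an all-to-all, non-rigid alignment of 3D data. It rests on several assumptions:

- Points are 2D `(x, y, label)` tuples, as if viewed top-down.
- Only a single rigid transform between two scans is estimated.
- Correspondences are brute-force nearest neighbors restricted to points with the same label.

The stage schedule (facades first, then poles, then cars) and all function names (`fit_rigid_2d`, `transform`, `nearest_same_label`, `semantic_icp`) are hypothetical.

```python
import math

# Each point is a hypothetical (x, y, label) tuple: a 2D, top-down simplification.


def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired 2D points src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # Work with centroid-subtracted coordinates.
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # closed-form optimal 2D rotation
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    """Rotate by theta, then translate by (tx, ty); labels are carried along."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty, lab) for x, y, lab in points]


def nearest_same_label(p, target):
    """Brute-force nearest target point that shares p's semantic label, or None."""
    same = [q for q in target if q[2] == p[2]]
    if not same:
        return None
    return min(same, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def semantic_icp(source, target, schedule, iters_per_stage=10):
    """Rigid ICP where each stage only uses the semantic classes listed for it."""
    current = list(source)
    for active in schedule:
        for _ in range(iters_per_stage):
            pairs = []
            for p in current:
                if p[2] not in active:
                    continue  # this class does not drive correspondences in this stage
                q = nearest_same_label(p, target)
                if q is not None:
                    pairs.append(((p[0], p[1]), (q[0], q[1])))
            if len(pairs) < 2:
                break  # too few correspondences to estimate a rotation
            src, dst = zip(*pairs)
            current = transform(current, *fit_rigid_2d(src, dst))
    return current


# Hypothetical schedule: large structures first, smaller objects added later.
SCHEDULE = [{"facade"}, {"facade", "pole"}, {"facade", "pole", "car"}]

target = [(0, 0, "facade"), (10, 0, "facade"), (20, 0, "facade"), (0, 10, "facade"),
          (5, 5, "pole"), (15, 5, "pole"), (12, 8, "car")]
source = transform(target, 0.05, 0.3, -0.2)  # synthetic misalignment
aligned = semantic_icp(source, target, SCHEDULE)
err = max(math.hypot(a[0] - b[0], a[1] - b[1]) for a, b in zip(aligned, target))
print(f"max residual: {err:.2e}")
```

Restricting the nearest-neighbor search to points with the same label keeps, for example, a pole from being paired with a nearby facade point. The per-stage class sets are where a coarse-to-fine schedule would be encoded.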

Citation

Fisher Yu, Jianxiong Xiao, and Thomas Funkhouser.

"Semantic Alignment of LiDAR Data at City Scale."

Computer Vision and Pattern Recognition (CVPR), June 2015.

BibTeX

@inproceedings{Yu:2015:SAO,
  author = "Fisher Yu and Jianxiong Xiao and Thomas Funkhouser",
  title = "Semantic Alignment of {LiDAR} Data at City Scale",
  booktitle = "Computer Vision and Pattern Recognition (CVPR)",
  year = "2015",
  month = jun
}